A surface-level look at the world of note-taking apps, what the impact of "AI" may be, and why the much-lauded benefits of these tools never really seem to materialise. Ironically, I came across the article because it is currently the most-read recent piece on Readwise, a tool explicitly designed as a note-taking app. That may not be the best sign of the benefits its users are feeling (or not feeling, as this would suggest 😅).

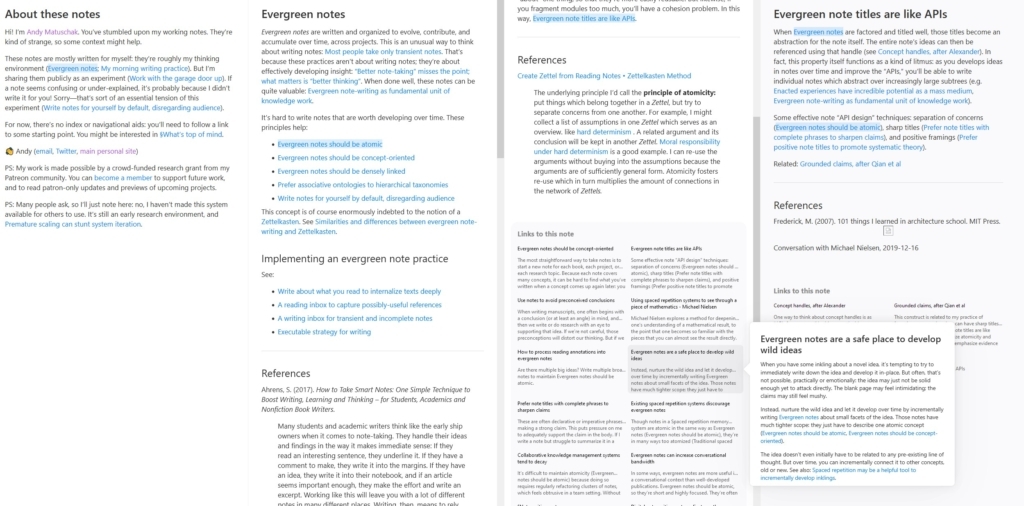

I agree with Casey's experience of note-taking: simply building a database of neatly back-linked and tagged information does not improve recall or understanding. But I find it funny that the single most successful tool for that very endeavour is rarely discussed in these circles: wikis. Wikipedia has managed to curate and interlink a vast amount of humanity's knowledge, and researchers and the general public all over the world use it to learn. Personal wikis have similar capabilities (yes, I am aware of the obvious bias, given that I am writing this on my personal wiki). Yet they rarely get a mention alongside tools such as Obsidian or Roam, which, whilst incredible in their own way, perhaps shouldn't be the final resting place for our notes, for that very reason. A wiki encourages a certain level of self-curation and expansion. That said, I suppose there is nothing stopping people from using Obsidian that way, either.

Still, I definitely think the points here about AI are valid: if there is one area AI will come to dominate, it is information retrieval and summarisation.

On the pros and cons of having all of human history and creative output at your fingertips:

> Collectively, this material offers me an abundance of riches — far more to work with than any beat reporter had such easy access to even 15 years ago.
>
> And yet most days I find myself with the same problem as the farmer: I have so much information at hand that I feel paralyzed.

On recall, one of the few real benefits I think AI will bring:

> An AI-powered link database has a perfect memory; all it’s missing is a usable chat interface. If it had one, it might be a perfect research assistant.

On the power of AI as a tool for bubbling ideas up from an archive:

> But if I could chat in natural language with a massive archive, built from hand-picked trustworthy sources? That seems powerful to me, at least in the abstract.

On the problem of hallucinations and the lack of citations:

> A significant problem with using AI tools to summarize things is that you can’t trust the summary unless you read all the relevant documents yourself — defeating the point of asking for a summary in the first place.

On the issue with most note-taking software, namely how it abstracts away the central purpose (questioning your own knowledge), and also because I just really like the "transient strings of text" bit:

> I’ll admit to having forgotten those questions over the past couple years as I kept filling up documents with transient strings of text inside expensive software.