Josh's custom CSS reset | Josh W. Comeau

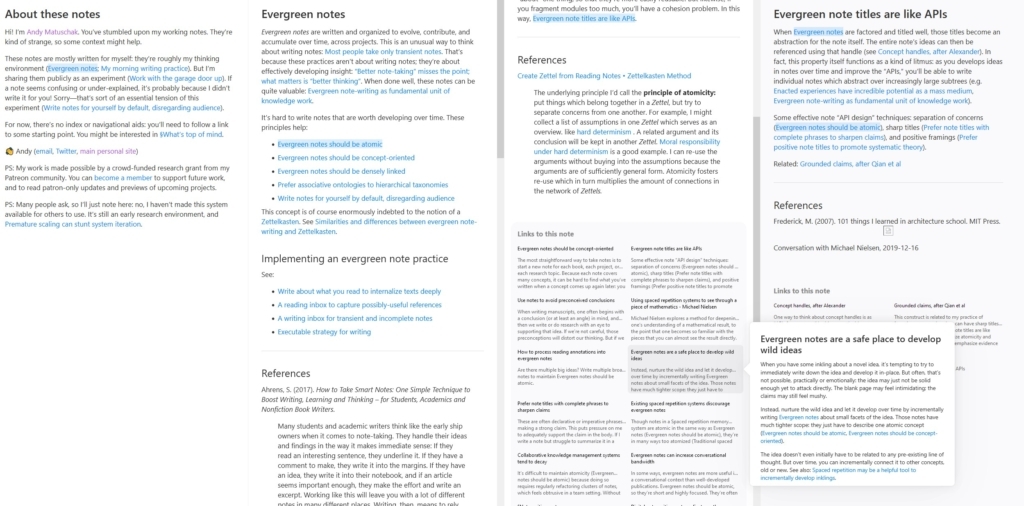

Josh has added some additional thoughts to Andy's CSS reset. Personally, I like a combination of the two (with a dash of Stephanie's best practices thrown in for good measure), but wanted to capture both for posterity.

On the grandfather of CSS resets by Eric Meyer (which has been my go-to for a while now as well):

For a long time, I used Eric Meyer's famous CSS Reset. It's a solid chunk of CSS, but it's a bit long in the tooth at this point; it hasn't been updated in more than a decade, and a lot has changed since then!

On why images should be block-level rather than inline:

Typically, I treat images the same way I treat paragraphs or headers or sidebars; they're layout elements.

By setting display: block on all images by default, we sidestep a whole category of funky issues.
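
To make that category of funky issues a bit more concrete, here's a rough sketch of the one I run into most: the sliver of mystery space underneath an inline image. The `.thumbnail` class is made up for illustration and isn't part of either reset.

```css
/* Hypothetical wrapper class, purely for illustration. */
.thumbnail {
  border: 1px solid;
}

/* Browser default: <img> is inline, so it sits on the text baseline and
   the line box keeps room below it for descenders (the tails on g, y, p).
   That shows up as a few pixels of extra space inside .thumbnail. */
.thumbnail img {
  display: inline;
}

/* Same selector, declared later, so this one wins. As a block-level box
   the image no longer takes part in a line of text, and the gap goes away. */
.thumbnail img {
  display: block;
}
```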

On a more sensible default for interactive element font styles. Also TIL about the font shorthand property – very clever:

If we want to avoid this auto-zoom behavior, the inputs need to have a font-size of at least 1rem / 16px.
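
For context, the auto-zoom in question is mobile Safari zooming in when you focus a form field whose text is smaller than 16px. A minimal sketch of the size-only fix, assuming the same four form elements the reset targets and a root font size left at the usual 16px default:

```css
/* Sketch of the size-only approach: bump form controls up to the
   16px minimum so focusing them doesn't trigger the zoom.
   1rem equals 16px only while the root font size is the default. */
input, button, textarea, select {
  font-size: 1rem;
}
```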

This fixes the auto-zoom issue, but it's a band-aid. Let's address the root cause instead: form inputs shouldn't have their own typographical styles!

font is a rarely-used shorthand that sets a bunch of font-related properties, like font-size, font-weight, and font-family.
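
Since the shorthand was new to me, here's a small sketch of what it expands to. The `.example` classes and the specific values are mine, picked only to show the mapping.

```css
/* Shorthand form: style, weight, size/line-height, then family.
   Any font sub-property left out is reset to its initial value. */
.example-shorthand {
  font: italic 700 1rem/1.5 system-ui, sans-serif;
}

/* Roughly the same thing written out as longhands. */
.example-longhand {
  font-style: italic;
  font-weight: 700;
  font-size: 1rem;
  line-height: 1.5;
  font-family: system-ui, sans-serif;
}

/* With the inherit keyword, every one of those sub-properties is taken
   from the parent, so form controls match the surrounding text. */
input, button, textarea, select {
  font: inherit;
}
```

One thing to keep in mind: with `font: inherit`, a form control is only as large as the text around it, so the surrounding font size still needs to be at least 16px to stay clear of the auto-zoom.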

And the reset itself:

/*
  Josh's Custom CSS Reset
  https://www.joshwcomeau.com/css/custom-css-reset/
*/
*, *::before, *::after {
  box-sizing: border-box;
}
* {
  margin: 0;
}
body {
  line-height: 1.5;
  -webkit-font-smoothing: antialiased;
}
img, picture, video, canvas, svg {
  display: block;
  max-width: 100%;
}
input, button, textarea, select {
  font: inherit;
}
p, h1, h2, h3, h4, h5, h6 {
  overflow-wrap: break-word;
}
#root, #__next {
  isolation: isolate;
}
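
A couple of these rules are easier to appreciate with numbers and a concrete layout. Here's a rough sketch; the `.card`, `.app-header`, and `.modal` classes are made up for illustration, and it assumes `.modal` is rendered outside `#root` (say, by a portal that appends it to `<body>`).

```css
/* box-sizing: border-box makes width include padding and border.
   Rendered width: 200px (content area: 200 - 2*20 - 2*2 = 156px).
   Under the default content-box it would render 244px wide instead. */
.card {
  width: 200px;
  padding: 20px;
  border: 2px solid;
}

/* isolation: isolate turns #root into its own stacking context, so this
   large z-index only competes with other elements inside #root. */
.app-header {
  position: sticky;
  top: 0;
  z-index: 9999;
}

/* Assumed to live outside #root. A modest z-index is enough to paint
   above everything inside #root, .app-header included. */
.modal {
  position: fixed;
  inset: 0;
  z-index: 1;
}
```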